Dirty COW Through the Kernel Source

This walkthrough starts from the Dirty COW PoC code.


Next, we trace through the kernel source what happens when write() is called on the fd returned by opening /proc/self/mem.
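To make the starting point concrete, here is a minimal user-space sketch of the operation being traced: writing to an address of the calling process through /proc/self/mem. The helper name `poke_self` is made up for illustration. The sketch assumes a 64-bit Linux system with /proc mounted, and a target address in ordinary writable memory.

```c
#include <fcntl.h>
#include <stdint.h>
#include <sys/types.h>
#include <unistd.h>

/* Write len bytes from src to virtual address dst of the calling process,
 * going through /proc/self/mem instead of a plain memcpy().
 * Returns the number of bytes written, or -1 on failure.
 * poke_self is a hypothetical helper, not part of any library. */
static ssize_t poke_self(void *dst, const void *src, size_t len)
{
    int fd = open("/proc/self/mem", O_RDWR);
    if (fd < 0)
        return -1;

    /* The file offset of /proc/self/mem is the target virtual address. */
    if (lseek(fd, (off_t)(uintptr_t)dst, SEEK_SET) == (off_t)-1) {
        close(fd);
        return -1;
    }

    /* This write() is the system call whose kernel path is traced here. */
    ssize_t n = write(fd, src, len);
    close(fd);
    return n;
}
```

Calling `poke_self(buf, "BBBB", 4)` on a local `char buf[8]` should overwrite the first four bytes of `buf`, provided the environment allows opening /proc/self/mem.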

write()

SYSCALL_DEFINE3()

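The write system call enters the kernel through SYSCALL_DEFINE3(write, ...) in fs/read_write.c. This sys_write() gathers a few parameters (the file object behind the fd and the current file position) and then calls vfs_write() to do the actual write.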


vfs_write()

vfs_write() is the generic file-write operation provided by the virtual file system layer. It is essentially a wrapper around __vfs_write().


__vfs_write()

This function mainly calls the write() method stored in the file object itself, that is, file->f_op->write.
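The dispatch described above is ordinary function-pointer indirection. The following is a simplified user-space model of it, not kernel code: all `toy_` names are invented for this sketch, and the struct only loosely mirrors struct file and struct file_operations.

```c
#include <stddef.h>
#include <string.h>
#include <sys/types.h>

struct toy_file;

/* Per-file method table, loosely modeled on struct file_operations. */
struct toy_file_ops {
    ssize_t (*write)(struct toy_file *f, const char *buf, size_t len);
};

struct toy_file {
    const struct toy_file_ops *f_op; /* which implementation to call */
    char data[64];                   /* backing storage for the toy file */
    size_t pos;                      /* current write position */
};

/* A concrete write method, standing in for mem_write(). */
static ssize_t toy_mem_write(struct toy_file *f, const char *buf, size_t len)
{
    size_t room = sizeof(f->data) - f->pos;
    if (len > room)
        len = room;
    memcpy(f->data + f->pos, buf, len);
    f->pos += len;
    return (ssize_t)len;
}

static const struct toy_file_ops toy_mem_ops = { .write = toy_mem_write };

/* Generic entry point: it knows nothing about the file type and only
 * dispatches through the method table. Returns -1 if no method is set. */
static ssize_t toy_vfs_write(struct toy_file *f, const char *buf, size_t len)
{
    if (!f->f_op || !f->f_op->write)
        return -1;
    return f->f_op->write(f, buf, len);
}
```

For /proc/self/mem the method table installed on the file is the one whose write method is mem_write(), which is why the trace continues there.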


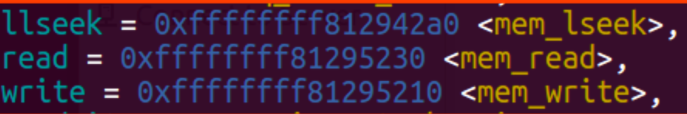

mem_write()

mem_write() (in fs/proc/base.c) simply calls mem_rw().


mem_rw()

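mem_rw() first uses the private data area of the /proc/self/mem file object to find which virtual address space the file maps, then allocates one temporary page in the kernel to serve as a kernel buffer.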
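The role of that temporary page can be sketched in user space as a bounce buffer: data moves from the source into the buffer, then from the buffer to the destination, at most one page at a time. This is a model under assumptions, not the kernel implementation: `toy_mem_rw` and `TOY_PAGE_SIZE` are invented names, and the two memcpy() calls stand in for what I understand to be copy_from_user() and access_remote_vm() in the write case.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

#define TOY_PAGE_SIZE 4096 /* assumed page size for this sketch */

/* Copy count bytes from src to dst through one temporary page-sized
 * buffer. Returns the number of bytes copied, or -1 if the buffer
 * cannot be allocated. */
static long toy_mem_rw(char *dst, const char *src, size_t count)
{
    char *page = malloc(TOY_PAGE_SIZE); /* the temporary "kernel" page */
    size_t copied = 0;

    if (!page)
        return -1;

    while (count > 0) {
        size_t this_len = count < TOY_PAGE_SIZE ? count : TOY_PAGE_SIZE;

        memcpy(page, src, this_len); /* stand-in for copy_from_user() */
        memcpy(dst, page, this_len); /* stand-in for access_remote_vm() */

        src += this_len;
        dst += this_len;
        copied += this_len;
        count -= this_len;
    }

    free(page);
    return (long)copied;
}
```

The buffer decouples the two sides: the caller's memory is only touched by the first copy, and the target address space only by the second.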


access_remote_vm()

access_remote_vm() is a wrapper around __access_remote_vm().


__access_remote_vm()

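__access_remote_vm() has two main parts. It first calls get_user_pages_remote() to pin the target page in memory so that the kernel can access it directly; it then copies the data to or from that page.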
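Because pages are pinned one at a time, a request that crosses a page boundary has to be cut into per-page pieces. The sketch below shows only that arithmetic. It assumes 4 KiB pages, and `toy_split_by_page` and `struct toy_chunk` are names invented here.

```c
#include <stddef.h>
#include <stdint.h>

#define TOY_PAGE_SIZE 4096u /* assumed page size for this sketch */

struct toy_chunk {
    uintptr_t page_base; /* start address of the page holding the chunk */
    size_t offset;       /* offset of the chunk inside that page */
    size_t bytes;        /* chunk length, never crossing the page end */
};

/* Split [addr, addr + len) into per-page chunks. Stores at most max
 * chunks in out and returns the total number of chunks needed. */
static size_t toy_split_by_page(uintptr_t addr, size_t len,
                                struct toy_chunk *out, size_t max)
{
    size_t n = 0;

    while (len > 0) {
        size_t offset = addr & (TOY_PAGE_SIZE - 1);
        size_t bytes = TOY_PAGE_SIZE - offset; /* room left in this page */

        if (bytes > len)
            bytes = len;
        if (n < max) {
            out[n].page_base = addr - offset;
            out[n].offset = offset;
            out[n].bytes = bytes;
        }
        n++;
        addr += bytes;
        len -= bytes;
    }
    return n;
}
```

For example, a 0x30-byte access starting at 0x1ff0 becomes two chunks: 0x10 bytes at offset 0xff0 of the page at 0x1000, then 0x20 bytes at offset 0 of the page at 0x2000.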


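The core question in the function above is how a page belonging to another process gets pinned in memory, so let's look at how get_user_pages_remote() is implemented.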

get_user_pages_remote()


__get_user_pages_locked()

Because locked is passed as NULL, __get_user_pages_locked() only sets up flags, calls __get_user_pages(), and returns its result directly; it never enters the VM_FAULT_RETRY logic.


__get_user_pages()


follow_page_mask()


follow_page_pte()

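For most normal pages, follow_page_pte() checks for two page-fault conditions, page not present and page not writable, and then calls vm_normal_page() to find the page descriptor (struct page) that corresponds to the PTE.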
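The two checks can be modeled with a toy PTE. This is a simplified sketch rather than the kernel function: the struct, the flag value, and the integer page id are all invented for illustration, and returning -1 stands in for returning no page so that the caller has to fault the page in.

```c
#include <stdbool.h>

/* Hypothetical flag value for this sketch, not taken from kernel headers. */
#define TOY_FOLL_WRITE 0x1u

struct toy_pte {
    bool present;  /* is the page mapped at all? */
    bool writable; /* does the PTE allow writes? */
    int page_id;   /* stand-in for the struct page behind the PTE */
};

/* Returns the page id on success, or -1 when the lookup fails and the
 * caller must trigger fault handling before retrying. */
static int toy_follow_page_pte(const struct toy_pte *pte, unsigned int flags)
{
    if (!pte->present)
        return -1; /* condition 1: page not present */

    if ((flags & TOY_FOLL_WRITE) && !pte->writable)
        return -1; /* condition 2: write requested, PTE is read-only */

    return pte->page_id; /* stand-in for vm_normal_page() */
}
```

Note that a read-only PTE only fails the lookup when the caller asked for write access; a read lookup on the same PTE succeeds.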


faultin_page()

faultin_page() converts the FOLL_* flags in flags into the FAULT_FLAG_* flags used by handle_mm_fault(), and then calls handle_mm_fault() to handle the fault.
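The flag translation is a plain bit-by-bit mapping from one flag namespace to another. The sketch below covers only a subset of flags, based on my reading of the kernel, and every bit value is a placeholder chosen for this example rather than the value in the kernel headers.

```c
/* Placeholder bit values chosen for this sketch; they are NOT the
 * values used by the kernel. */
#define TOY_FOLL_WRITE  0x1u
#define TOY_FOLL_NOWAIT 0x2u
#define TOY_FOLL_TRIED  0x4u

#define TOY_FAULT_FLAG_WRITE        0x10u
#define TOY_FAULT_FLAG_ALLOW_RETRY  0x20u
#define TOY_FAULT_FLAG_RETRY_NOWAIT 0x40u
#define TOY_FAULT_FLAG_TRIED        0x80u

/* Translate lookup flags (FOLL_* style) into fault-handling flags
 * (FAULT_FLAG_* style). */
static unsigned int toy_foll_to_fault_flags(unsigned int foll)
{
    unsigned int fault = 0;

    if (foll & TOY_FOLL_WRITE)
        fault |= TOY_FAULT_FLAG_WRITE;
    if (foll & TOY_FOLL_NOWAIT)
        fault |= TOY_FAULT_FLAG_ALLOW_RETRY | TOY_FAULT_FLAG_RETRY_NOWAIT;
    if (foll & TOY_FOLL_TRIED)
        fault |= TOY_FAULT_FLAG_TRIED;

    return fault;
}
```

The point of interest for this walkthrough is the first mapping: a write lookup becomes a write fault, which is what later drives the copy-on-write path.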


handle_mm_fault()

handle_mm_fault() is a wrapper: after some preprocessing it calls __handle_mm_fault(), which does the real work.


__handle_mm_fault()

__handle_mm_fault() first does some simple preliminary processing.


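At this point the page-table structures covering address are in place. handle_pte_fault() is then called to handle the fault at the PTE level, which is where a page frame is actually allocated and the PTE is set to complete the mapping.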
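To picture what "the page-table structures covering address" means, the sketch below splits a virtual address into the index used at each level. It assumes x86-64 with 4-level paging and 4 KiB pages (9 index bits per level, 12 offset bits); other architectures and configurations differ. All `toy_`/`TOY_` names are invented for this example.

```c
#include <stdint.h>

/* Assumed layout: x86-64, 4-level paging, 4 KiB pages. */
#define TOY_PAGE_SHIFT 12
#define TOY_PMD_SHIFT  21
#define TOY_PUD_SHIFT  30
#define TOY_PGD_SHIFT  39
#define TOY_INDEX_MASK 0x1ffu /* 9 bits of index per level */

struct toy_walk {
    unsigned pgd;    /* index into the top-level table */
    unsigned pud;    /* index into the second-level table */
    unsigned pmd;    /* index into the third-level table */
    unsigned pte;    /* index into the last-level table */
    unsigned offset; /* byte offset inside the page */
};

/* Decompose a virtual address into its per-level table indices. */
static struct toy_walk toy_split_address(uint64_t addr)
{
    struct toy_walk w;

    w.pgd = (unsigned)((addr >> TOY_PGD_SHIFT) & TOY_INDEX_MASK);
    w.pud = (unsigned)((addr >> TOY_PUD_SHIFT) & TOY_INDEX_MASK);
    w.pmd = (unsigned)((addr >> TOY_PMD_SHIFT) & TOY_INDEX_MASK);
    w.pte = (unsigned)((addr >> TOY_PAGE_SHIFT) & TOY_INDEX_MASK);
    w.offset = (unsigned)(addr & ((1u << TOY_PAGE_SHIFT) - 1));
    return w;
}
```

Once the tables for the upper three indices exist, only the last-level entry remains to be filled in, which is the job handed to handle_pte_fault().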


handle_pte_fault()

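- This function is also a dispatcher. It first handles the case where the page is not present, which branches into three cases

- In an anonymous mapping, the page-table entry has only just been created, so the PTE is none: handled by do_anonymous_page()

- In a file mapping, the page-table entry has only just been created, so the PTE is none: handled by do_fault()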
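The not-present dispatch can be sketched as a small decision function. The two none-PTE branches follow the list above. The third branch (a non-none, not-present PTE going to do_swap_page()) is my understanding of the kernel and not something stated in the text here. The types and names are invented for illustration.

```c
#include <stdbool.h>

/* Which handler the dispatcher would pick; names mirror the kernel
 * functions but this enum is invented for the sketch. */
enum toy_handler {
    TOY_DO_ANONYMOUS_PAGE,
    TOY_DO_FAULT,
    TOY_DO_SWAP_PAGE, /* assumption: not taken from the text above */
    TOY_PAGE_PRESENT  /* page is present; other handling applies */
};

struct toy_pte_state {
    bool present; /* PTE maps a page that is in memory */
    bool none;    /* PTE is completely empty */
};

static enum toy_handler toy_handle_pte_fault(struct toy_pte_state pte,
                                             bool vma_is_anonymous)
{
    if (!pte.present) {
        if (pte.none)
            return vma_is_anonymous ? TOY_DO_ANONYMOUS_PAGE
                                    : TOY_DO_FAULT;
        return TOY_DO_SWAP_PAGE;
    }
    return TOY_PAGE_PRESENT;
}
```

The only inputs that matter at this stage are the state of the PTE and the kind of mapping the address belongs to.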
